On February 12, 2026, Xiaomi officially released its first open-source robotic VLA (Vision-Language-Action) model, named “Xiaomi-Robotics-0”. With 4.7 billion parameters, the model combines vision-language understanding with real-time action execution, and Xiaomi reports new SOTA (State of the Art) results across multiple benchmarks.

Here are the key technical features and capabilities of the model:

Architecture: Brain and Cerebellum Collaboration

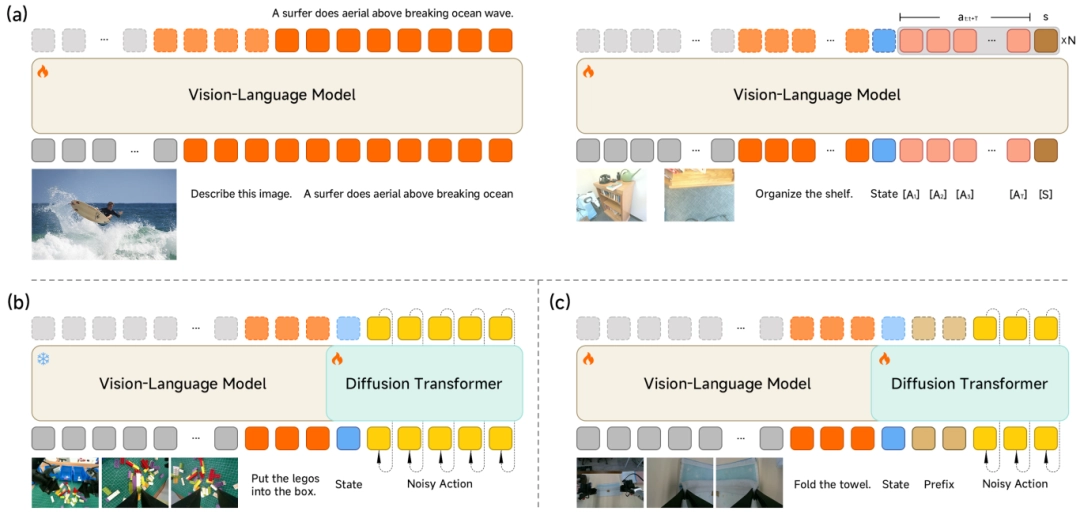

To balance general understanding with precise control, Xiaomi-Robotics-0 utilizes a “Mixture-of-Transformers” (MoT) architecture.

- Visual-Language Brain (VLM): Built on a multimodal VLM base, it is responsible for understanding vague human commands (e.g., “please fold the towel”) and capturing spatial relationships from high-definition visual inputs.

- Action Execution Cerebellum (Action Expert): To generate high-frequency, smooth movements, a multi-layer Diffusion Transformer (DiT) is embedded. Instead of outputting a single action, it generates an “Action Chunk” (a short sequence of future actions) and uses flow matching to keep the generated actions precise.
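
To make the “Action Chunk” idea concrete, here is a minimal sketch of how a flow-matching model can turn noise into a whole chunk of actions by Euler integration. It is not Xiaomi's implementation: `sample_action_chunk`, `toy_velocity`, the step count, and the convention that t=0 is noise and t=1 is the action are all assumptions made for illustration, and the toy velocity field stands in for the learned DiT.

```python
import numpy as np

def sample_action_chunk(velocity_fn, chunk_len, action_dim, num_steps=10, rng=None):
    """Integrate dx/dt = v(x, t) from t=0 (noise) to t=1 (actions) with Euler steps."""
    rng = np.random.default_rng() if rng is None else rng
    x = rng.standard_normal((chunk_len, action_dim))  # start from Gaussian noise
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt                      # t stays strictly below 1 inside the loop
        x = x + dt * velocity_fn(x, t)  # one Euler step along the flow
    return x

# Toy target trajectory: 8 timesteps of a 2-D action ramping from 0 to 1.
target = np.linspace(0.0, 1.0, 8)[:, None] * np.ones((8, 2))

def toy_velocity(x, t):
    # Hypothetical stand-in for the learned action expert: on a straight
    # noise-to-target path, the remaining displacement divided by the
    # remaining time is the velocity.
    return (target - x) / (1.0 - t)

chunk = sample_action_chunk(toy_velocity, chunk_len=8, action_dim=2,
                            num_steps=10, rng=np.random.default_rng(0))
print(chunk.shape)
```

The point of the sketch is that all timesteps of the chunk are denoised together, so one pass through the sampler yields a full short trajectory rather than a single action.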

Training Strategy: Preventing “Dumbing Down”

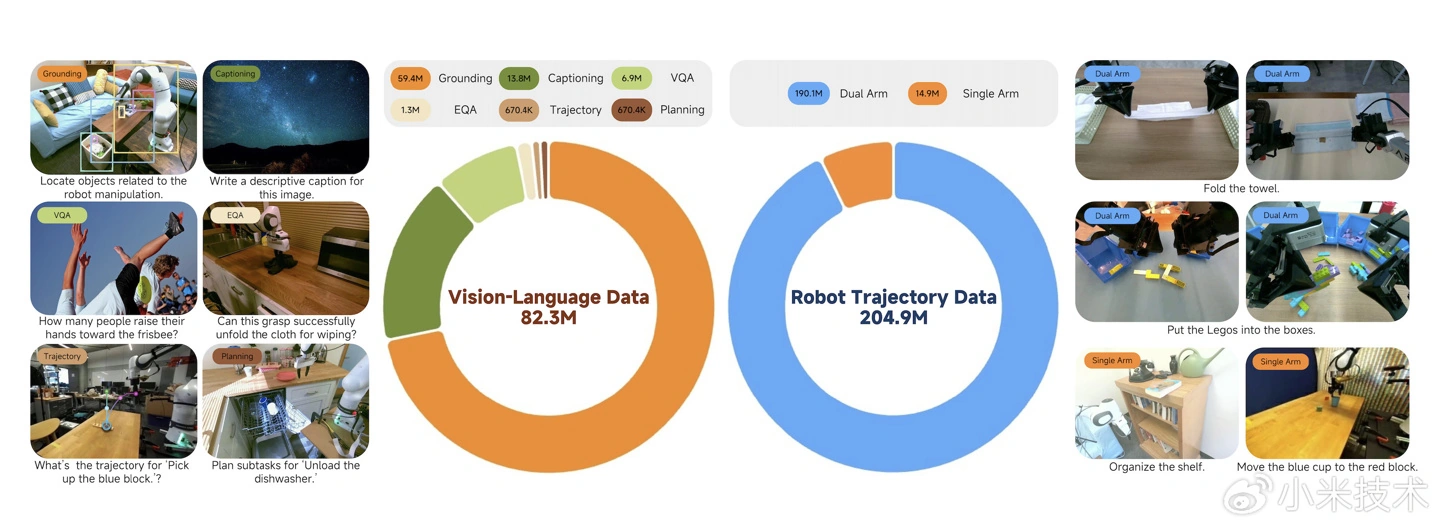

Many VLA models tend to lose their general understanding capabilities while learning actions. Xiaomi addresses this with a hybrid training method combining multimodal data with action data:

- VLM Synergistic Training: An “Action Proposal” mechanism forces the VLM to predict action distributions while understanding images, aligning the VLM’s feature space with the action space.

- DiT Specialized Training: The VLM is frozen, and the DiT is trained to recover precise action sequences from noise, conditioned entirely on the VLM's KV (key-value) features.
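
The second stage can be illustrated with a generic flow-matching objective. The sketch below assumes a straight-line path between noise and the ground-truth chunk, which is a common choice but not confirmed by the source; `flow_matching_loss`, `kv_features`, and both toy predictors are hypothetical names, and the array `kv` is only a placeholder for cached features from the frozen VLM.

```python
import numpy as np

def flow_matching_loss(predict_velocity, actions, kv_features, rng):
    """Loss for one training sample of the action expert (the VLM is not updated)."""
    noise = rng.standard_normal(actions.shape)  # x_0: pure noise
    t = rng.uniform(0.0, 1.0)                   # random point along the path
    x_t = (1.0 - t) * noise + t * actions       # noisy action chunk at time t
    target_v = actions - noise                  # velocity of the straight path
    pred_v = predict_velocity(x_t, t, kv_features)
    return float(np.mean((pred_v - target_v) ** 2))

rng = np.random.default_rng(0)
actions = rng.standard_normal((8, 2))  # toy ground-truth action chunk
kv = np.zeros((4, 16))                 # placeholder for frozen-VLM KV features

# An untrained predictor that always outputs zero velocity.
zero_model = lambda x_t, t, kv: np.zeros_like(x_t)
# A "cheating" oracle that sees the ground truth, used only to check the arithmetic.
oracle = lambda x_t, t, kv: (actions - x_t) / (1.0 - t)

loss_zero = flow_matching_loss(zero_model, actions, kv, rng)
loss_oracle = flow_matching_loss(oracle, actions, kv, rng)
print(loss_zero, loss_oracle)
```

In a real setup the predictor would be the DiT, and gradients would flow only into its parameters while the VLM features are treated as fixed inputs.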

Real-Time and Fluid Movements

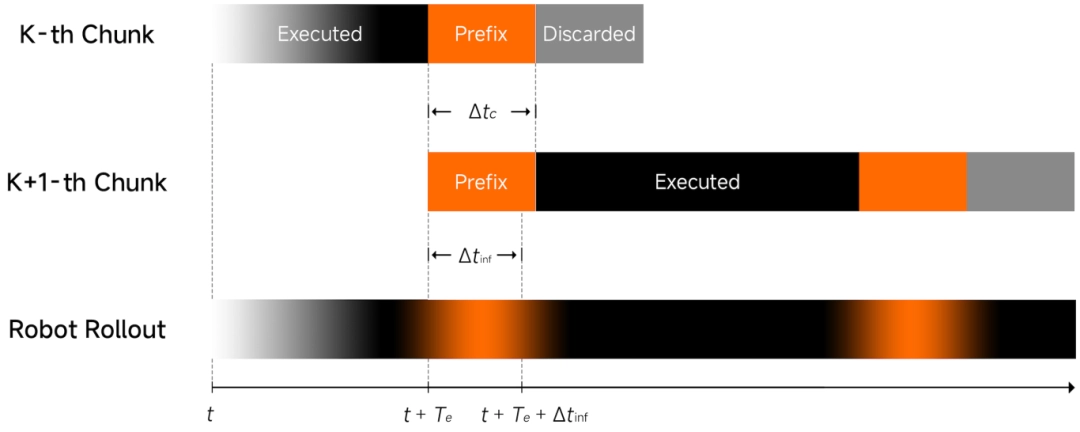

To solve the “action stuttering” caused by inference latency in real robots, the team introduced innovative techniques:

- Asynchronous Inference: Decouples the model’s reasoning process from the robot’s execution, allowing them to run asynchronously for smoother operation.

-

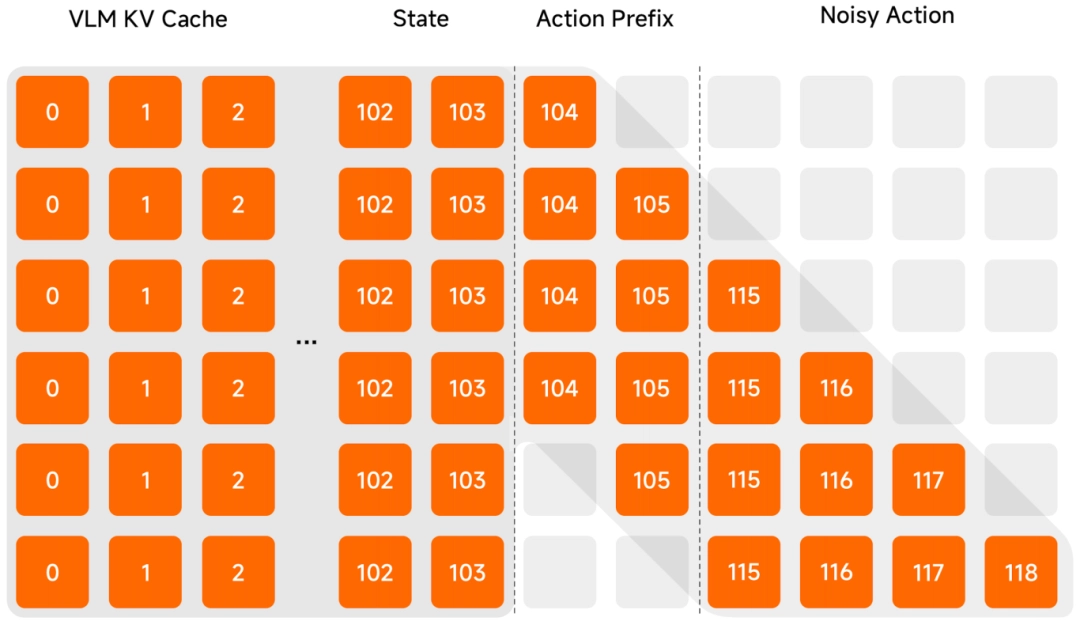

Clean Action Prefix: Uses the previously predicted action as input to ensure trajectory continuity and reduce jitter.

- $\Lambda$-shape Attention Mask: A special attention mask forces the model to focus on current visual feedback rather than historical inertia, making the robot highly responsive to sudden environmental changes.
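
The interplay of asynchronous inference and the clean action prefix can be sketched as a small tick-based simulation. This is an illustrative scheduling model rather than the released code: `run_async`, `toy_policy`, and the rule "start a new inference when exactly `latency` actions remain" are assumptions, and the sketch requires `1 <= latency < chunk_len`. It does not model the attention mask.

```python
from collections import deque
import numpy as np

def toy_policy(prefix, start_tick, chunk_len):
    """Hypothetical stand-in for the VLA: keep the clean prefix, then continue the motion."""
    ticks = np.arange(start_tick, start_tick + chunk_len)
    chunk = np.sin(0.1 * ticks)
    chunk[:len(prefix)] = prefix  # committed actions are copied through unchanged
    return chunk

def run_async(policy, total_ticks, chunk_len, latency):
    """Execute one action per tick while inference (taking `latency` ticks) runs in the background."""
    assert 1 <= latency < chunk_len
    queue = deque(policy(np.empty(0), 0, chunk_len))  # first chunk has no prefix
    pending = None   # (tick at which the result is ready, new actions)
    executed = []
    for tick in range(total_ticks):
        if pending is not None and pending[0] == tick:
            queue.extend(pending[1])  # inference finished: append the new actions
            pending = None
        if pending is None and len(queue) == latency:
            # The remaining actions will be executed while the model computes,
            # so they are handed to the model as a clean prefix.
            prefix = np.array(queue)
            chunk = policy(prefix, tick, chunk_len)
            pending = (tick + latency, chunk[latency:])
        executed.append(queue.popleft())  # the robot never waits for the model
    return np.array(executed)

executed = run_async(toy_policy, total_ticks=30, chunk_len=8, latency=3)
print(len(executed))
```

Because each new chunk begins with actions the robot is already committed to, the newly generated part joins the old trajectory without a gap, and the control loop emits an action on every tick despite the inference delay.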

Performance & Availability

-

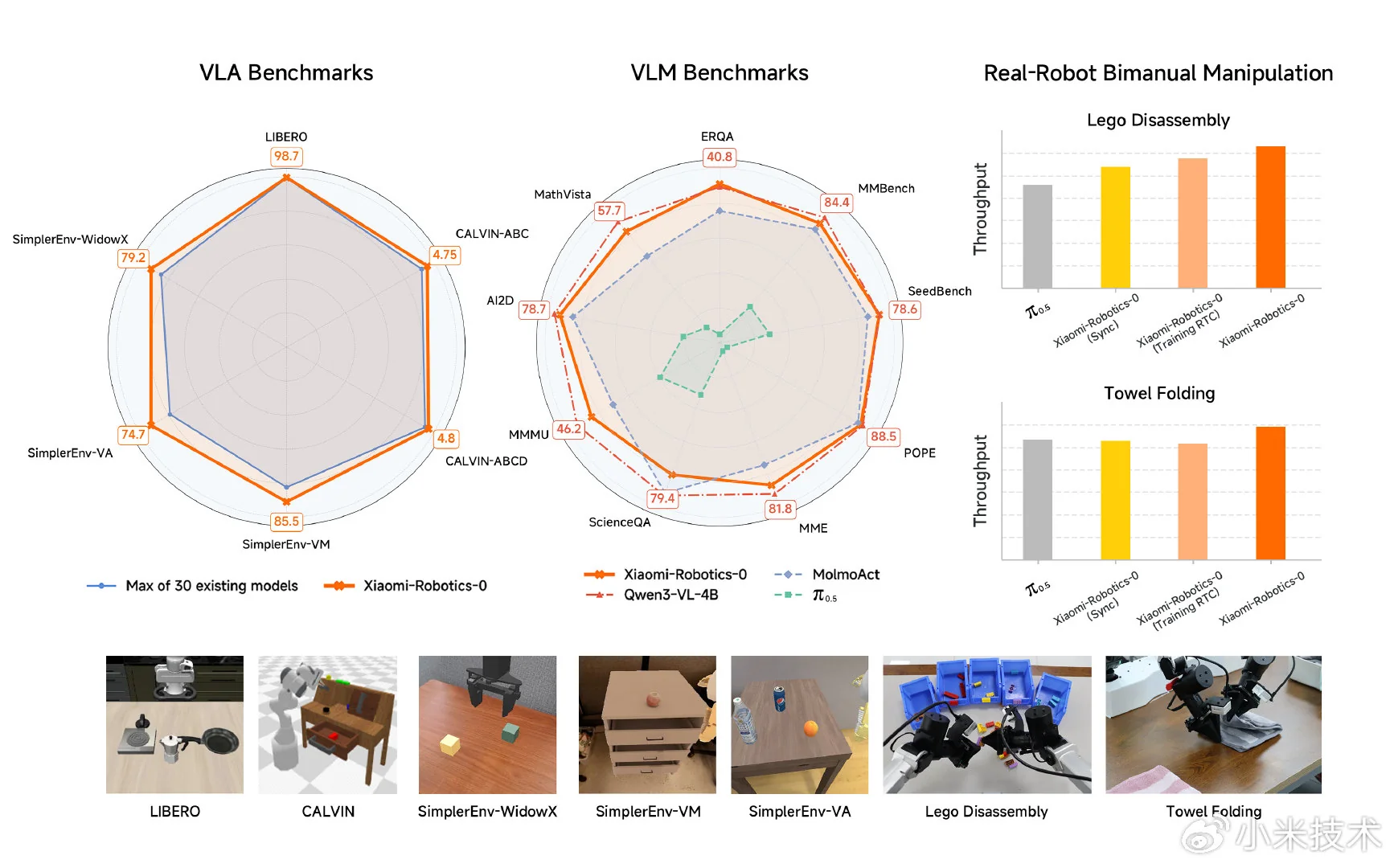

Benchmark Leader: The model achieved top results among 30 models in simulation benchmarks like LIBERO, CALVIN, and SimplerEnv.

- Real-World Challenges: In tests with dual-arm robots, it demonstrated superior hand-eye coordination in long-horizon tasks like dismantling blocks and folding soft towels.

- Hardware Compatibility: It supports real-time inference on consumer-grade graphics cards.

Xiaomi has made the project page, source code, and model weights available to the public:

- Project Page: https://xiaomi-robotics-0.github.io

- Code: https://github.com/XiaomiRobotics/Xiaomi-Robotics-0

- Model Weights: https://huggingface.co/XiaomiRobotics

Emir Bardakçı
