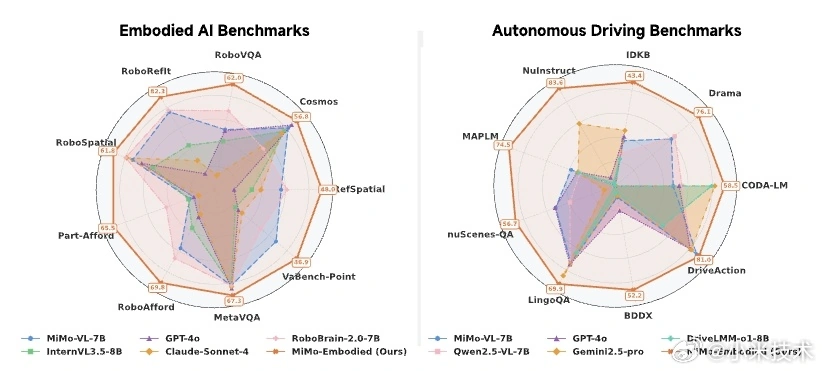

Marking a milestone for both Xiaomi's intelligent ecosystem and the broader AI research community, Xiaomi has officially released and fully open-sourced MiMo-Embodied, its large-scale embodied model. Embodied intelligence is spreading into the home, and autonomous driving is gaining wider acceptance. Against that backdrop, Xiaomi aims to solve a long-standing challenge: making indoor robots and outdoor vehicles interoperable in both cognition and capability. With MiMo-Embodied, Xiaomi introduces a unified foundation model that brings autonomous driving, embodied intelligence, and general AI capabilities into a single framework.

What Makes MiMo-Embodied a Breakthrough?

According to Xiaomi, MiMo-Embodied is the industry's first embodied foundation model to bridge autonomous driving and embodied intelligence. The model unifies task interpretation, perception, and decision-making across both home and mobility scenarios, laying a technical foundation for future cross-domain AI systems. The release also extends Xiaomi's ambitions beyond consumer electronics into advanced robotics and large-scale automation.

Key Technical Principles Behind the Model

MiMo-Embodied is designed to support cognition across multiple scenarios. It harmonizes embodied tasks, such as spatial reasoning and task planning, with driving tasks, such as perception and trajectory generation. This unified approach allows Xiaomi to move from specialized, single-domain intelligence toward scalable, cross-domain collaboration.

Three Key Technical Features

Broad Cross-Domain Capability Coverage

The model jointly supports three core embodied-intelligence tasks (affordance reasoning, task planning, and spatial understanding) and three core autonomous-driving tasks (environment perception, state prediction, and driving planning). Together, these capabilities cover the full range of scenarios, from home environments and indoor robotics to real-world traffic.

Two-Way Collaborative Intelligence

Xiaomi highlights that MiMo-Embodied enables knowledge transfer between indoor robotics and autonomous driving. According to the company, its tests showed that stronger decision-making in home robotics improved on-road driving performance, and vice versa. This bidirectional enhancement points to a new framework for integrated smart systems.

Full-Chain Optimization for Real-World Deployments

To ensure reliable deployment, Xiaomi designed a multi-stage training pipeline consisting of capability learning, Chain-of-Thought (CoT) reasoning enhancement, and reinforcement learning (RL) fine-tuning. This approach strengthens robustness in real environments, making the model suitable for complex, dynamic scenarios.

Performance Across 29 Benchmarks

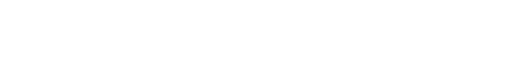

Xiaomi claims that MiMo-Embodied outperformed leading open-source and closed-source (proprietary) models on **29 critical benchmarks**.

Results of Embodied Intelligence

MiMo-Embodied achieved state-of-the-art performance on 17 benchmarks, demonstrating strong capabilities in task planning, spatial understanding, and affordance prediction.

Results of Autonomous Driving

It achieved state-of-the-art results on 12 benchmarks spanning perception, state prediction, and driving planning, setting a new bar for multi-stage driving intelligence.

General Visual-Language Understanding

MiMo-Embodied also demonstrated advanced generalization in visual-language tasks, confirming its versatility across broader AI domains.

Xiaomi Fully Opens the Model to Developers and Researchers

Xiaomi has open-sourced both the MiMo-Embodied model and its codebase, reinforcing the company's commitment to transparent, collaborative research. Developers can explore, adapt, and build on the model through the official repositories. By opening up MiMo-Embodied, Xiaomi aims to accelerate innovation in intelligent robotics, smart mobility, and connected ecosystems.

Emir Bardakçı