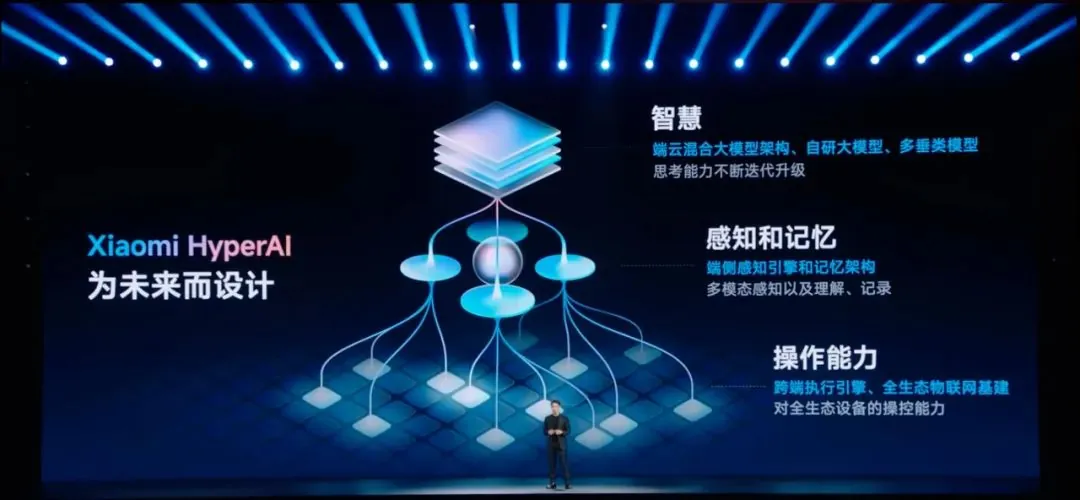

After years of AI development, Xiaomi has unveiled MiLM2, the second generation of its large language model. Compared with the first-generation MiLM, released in 2023, MiLM2 improves on model architecture, data quality, and application scope. MiLM2 sits at the core of Xiaomi's plan for an intelligent, interconnected environment, which the company calls a "Full Ecosystem for People, Cars, and Homes."

Key Upgrades in MiLM2

MiLM2 has been upgraded to meet diverse needs across mobile devices, vehicles, smart homes, and many other areas. Its core enhancements include:

- Expanding Parameter Matrix and Scalability: MiLM2 spans models from 0.3 billion parameters, light enough to run on-device, up to 30 billion parameters for demanding cloud tasks. This range lets Xiaomi deploy MiLM2 everywhere from smartphones to cloud services. The parameter range is tuned for integration across cloud, edge, and end devices, so AI capabilities carry over smoothly across every level of the Xiaomi ecosystem.

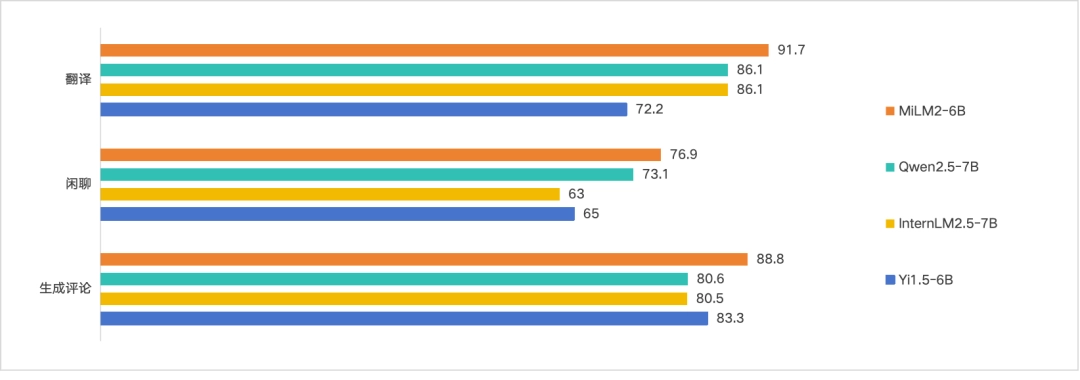

- Improved Performance Across Key Capabilities: Compared with the first generation, MiLM2 improves by an average of 45% across 10 key capabilities, including instruction following, translation, and dialogue. This puts MiLM2 at the leading edge of industry performance and makes it highly competitive with models of comparable size. The gains in command recognition, dialogue management, and translation strengthen its role as a smart assistant and support Xiaomi's vision of an intelligent ecosystem that serves consumers.

- Faster On-Device Inference: MiLM2 supports three major techniques for accelerating inference on the client side: large/small-model speculative decoding, BiTA, and Medusa. Xiaomi's self-developed quantization method also cuts quantization loss by 78% compared with the industry standard, making the model better suited to devices with limited computing power. Together, these improvements give AI smartphones and IoT devices faster, more responsive AI with less reliance on cloud resources.

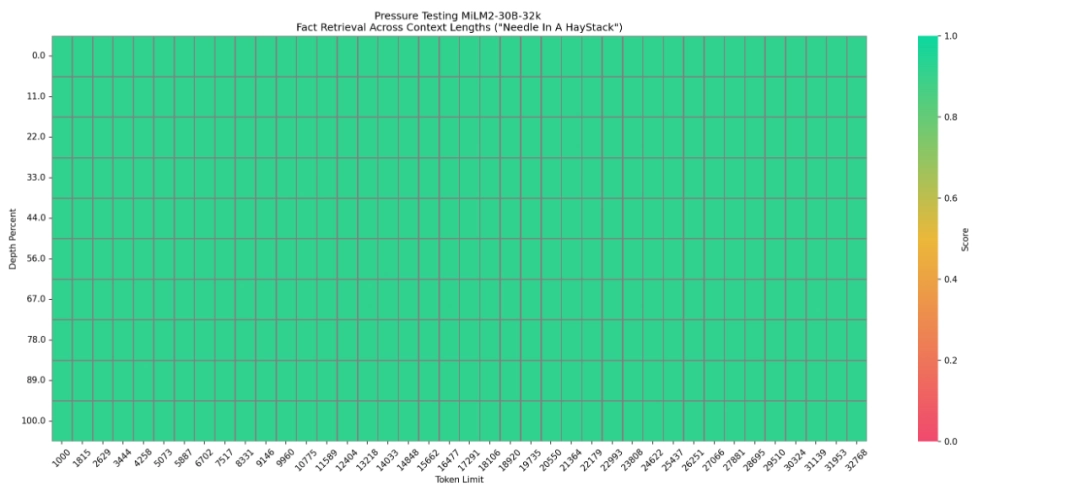

- Extended Context Window: MiLM2 expands the context window from 4,000 tokens in the first generation to 200,000 tokens. The larger window lets it handle long, complex documents and follow extended conversations, which is useful for applications that must process and understand large amounts of text, such as customer service or in-depth text analysis.
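The "large and small model speculation" named above is usually called speculative decoding: a small draft model guesses several tokens ahead and the large model verifies them, so more than one token can be accepted per expensive pass of the large model. The sketch below is a minimal greedy version under stated assumptions, not Xiaomi's implementation. The two "models" are hypothetical toy functions that return their single most likely next token, and verification is written as a loop, whereas a real system scores all drafted positions in one batched forward pass. BiTA and Medusa, broadly, draft future tokens from the main model itself (through extra prompt tokens or extra prediction heads) rather than from a separate small model, and are not shown here.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for a language model: given the tokens so far,
# return the single most likely next token (greedy decoding).
NextToken = Callable[[List[int]], int]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding.

    Returns the generated sequence (prompt included) and the number of
    verification rounds, i.e. conceptual forward passes of the target model.
    """
    seq = list(prompt)
    rounds = 0
    while len(seq) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target model checks each drafted position. A real system
        #    scores all k positions in one batched pass; we loop for clarity.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(seq + accepted)
            if expected != tok:
                # First disagreement: keep the target's own token and stop.
                accepted.append(expected)
                break
            accepted.append(tok)
        else:
            # Every draft token matched, so the same pass yields a bonus token.
            accepted.append(target(seq + accepted))
        rounds += 1
        seq.extend(accepted)
    return seq[: len(prompt) + max_new], rounds
```

With a greedy target, the output is identical to plain greedy decoding with the large model alone; the gain comes only from how many tokens each verification round accepts, which depends on how often the draft model agrees with the target.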

Comprehensive Test Set for MiLM2: Mi-LLMBM2.0

To evaluate MiLM2's quality and breadth, Xiaomi built a comprehensive evaluation set, Mi-LLMBM2.0, made up of 10 major categories and 170 sub-test items. These categories include:

- Text Generation

- Brainstorming

- Dialogue management

- Question answering

- Rewriting and summarization

- Text classification

- Data extraction

- Code processing

- Safe response generation

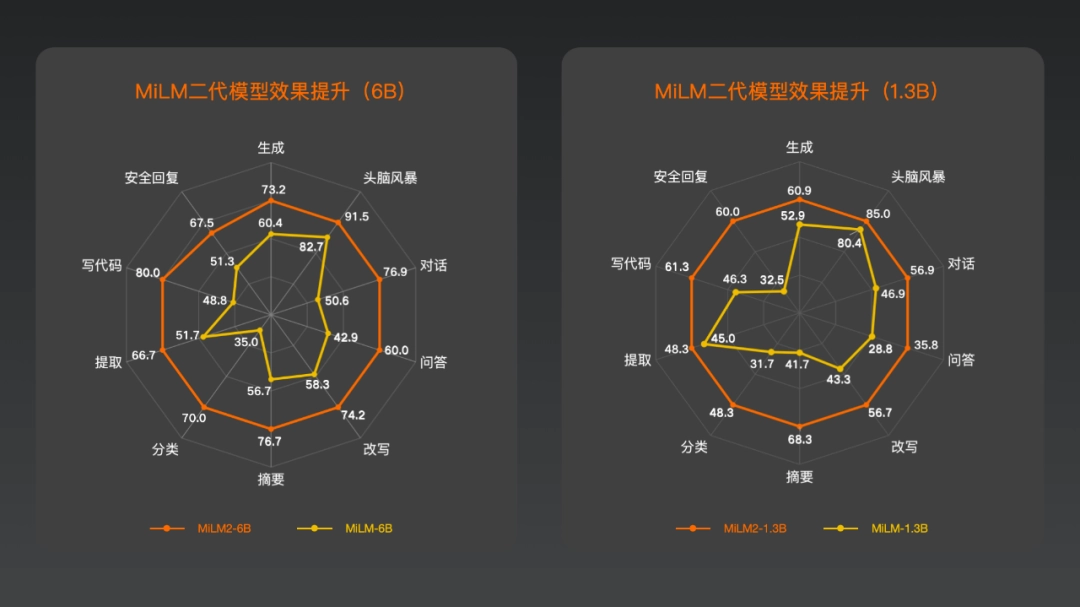

Evaluation results for MiLM2-1.3B and MiLM2-6B, measured against the first-generation models as baselines, show significant improvements across all categories, confirming MiLM2's gains in functionality and performance.

Strategic Model Variants: Cloud, Edge, and End Deployment

Within MiLM2, Xiaomi has built a flexible model matrix to support diverse use cases:

- 0.3B to 6B Parameters: Best suited to on-device and terminal use. This range scales across devices with different hardware capabilities, so performance can be optimized for each one. The smaller models let devices with limited computing power run AI efficiently.

- 6B and 13B Parameters: These models target multi-task and zero-shot learning and perform well on general-purpose AI functions across many Xiaomi products.

- 30B Parameters: This much more powerful cloud model handles very complex tasks that require advanced reasoning, such as multi-dimensional analysis and extended instruction following.
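A rough way to see why the smaller tiers suit devices and the 30B tier suits the cloud is to estimate how much memory the weights alone occupy: parameter count times bits per weight, divided by 8 to get bytes. The sketch below applies that arithmetic to the model sizes mentioned in this article. The storage formats are illustrative assumptions, since the article does not state which precisions Xiaomi uses, and the estimate leaves out activations, the KV cache, and runtime overhead.

```python
# Parameter counts (billions) for MiLM2 sizes mentioned in this article.
MODEL_SIZES_B = [0.3, 1.3, 4, 6, 13, 30]

# Bits per weight for common storage formats. Which formats Xiaomi actually
# ships is NOT stated in the article; these are illustrative assumptions.
FORMATS = {"fp16": 16, "int8": 8, "int4": 4}


def weight_gb(params_billion: float, bits: int) -> float:
    """Weights-only memory in decimal GB: params * bits / 8 bytes.

    (params_billion * 1e9 * bits / 8) bytes / 1e9 simplifies to the
    expression below. Real memory use is higher, because activations,
    the KV cache, and runtime overhead are ignored.
    """
    return params_billion * bits / 8


if __name__ == "__main__":
    for size in MODEL_SIZES_B:
        cells = "  ".join(f"{name}={weight_gb(size, bits):6.2f} GB"
                          for name, bits in FORMATS.items())
        print(f"{size:>5}B  {cells}")
```

By this estimate, a 4B model stored at 4 bits needs about 2 GB for its weights, while the 30B model at 16 bits needs about 60 GB, far beyond the memory of a phone.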

MoE Models for Efficiency and Performance

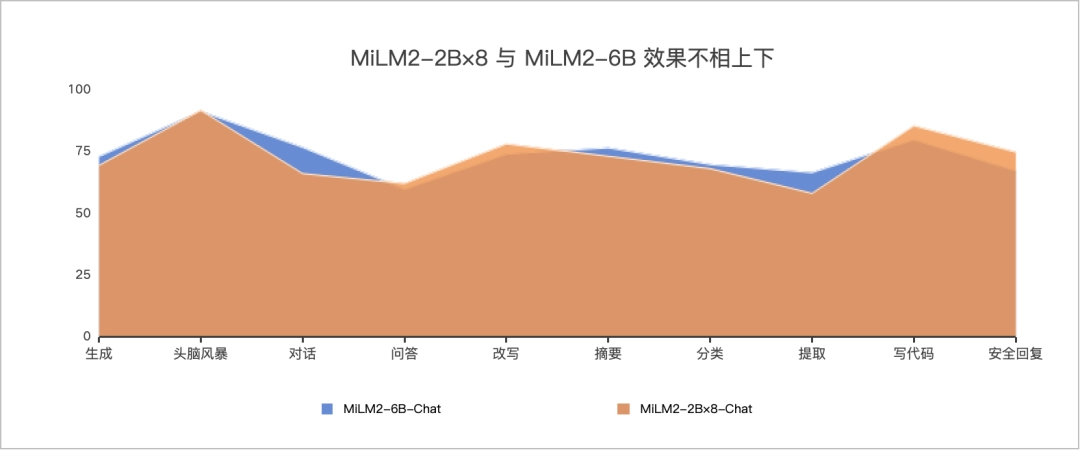

MiLM2 introduces two Mixture-of-Experts (MoE) models: MiLM2-0.7B×8 and MiLM2-2B×8. Each is built from multiple "expert" modules that specialize in particular functions. The MiLM2-2B×8 model, for example, delivers performance comparable to MiLM2-6B despite having fewer parameters, while decoding 50% faster. The architecture therefore gains substantial efficiency with nearly negligible loss of accuracy.
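MoE layers are commonly built with a learned router that sends each token to only a few experts, so most expert weights sit idle for any given token; that is where the decoding speedup comes from. The article does not describe MiLM2's routing, so the sketch below assumes a common top-k softmax gate, with 8 experts to match the "×8" in the model names and top_k=2 as an illustrative choice. The router vectors and expert functions are hypothetical stand-ins. In typical MoE training, each expert's specialization emerges through the learned router rather than being assigned by hand.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, router: List[Vector], experts: List[Expert],
              top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Send one token vector to its top_k experts and blend their outputs.

    router[i] is a (hypothetical) learned gate vector for expert i.
    Returns the blended output and the indices of the experts that ran.
    """
    # Router score per expert: dot product of the token with that gate vector.
    logits = [sum(g * xi for g, xi in zip(gate, x)) for gate in router]
    # Pick the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax over the chosen experts only, so their weights sum to 1.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # only chosen experts compute: the source of the speedup
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen
```

Only the selected experts run for a given token, so per-token compute scales with top_k rather than with the total number of experts, even though all experts' weights still have to be stored.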

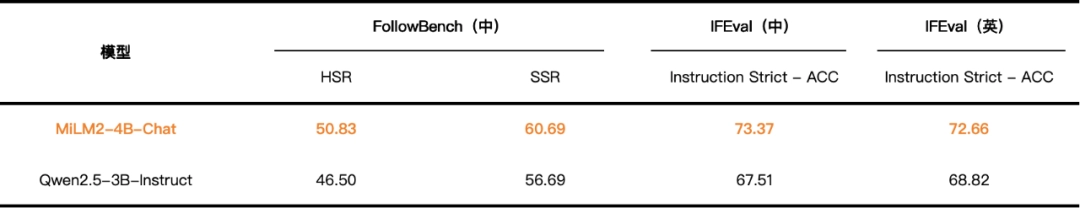

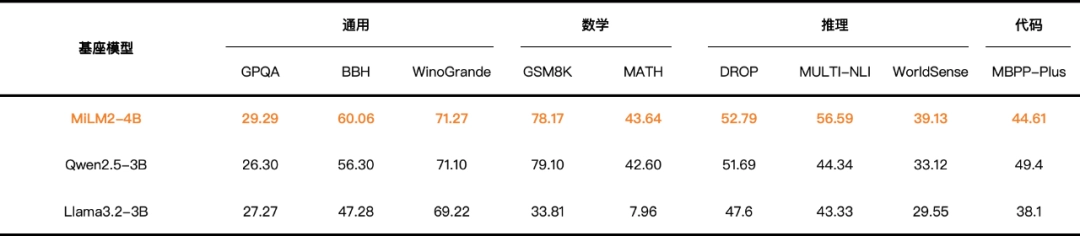

Breakthroughs in On-Device Deployment: The New 4B Model

MiLM2 goes a step further in on-device AI with a new 4B model designed specifically for client-side scenarios. Xiaomi's "TransAct Big Model Structured Pruning Method" prunes the model efficiently, reducing computational cost by 92% while preserving high accuracy. Xiaomi's proprietary "end-side quantization methods" optimize performance further, reducing precision loss by 78% compared with Qualcomm's solution, which Xiaomi uses as its industry benchmark.
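The article does not describe how Xiaomi's end-side quantization works or how its 78% figure is measured. As a generic illustration only, the sketch below shows symmetric round-to-nearest weight quantization and one common way to quantify "quantization loss": the mean squared error between the original weights and their quantize-then-dequantize reconstruction. It compares a single scale per tensor with one scale per output channel, a standard technique for reducing that loss. Neither variant is claimed to be Xiaomi's or Qualcomm's method, and the function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize(values: List[float], bits: int) -> Tuple[List[int], float]:
    """Symmetric round-to-nearest quantization with a single scale."""
    qmax = 2 ** (bits - 1) - 1          # e.g. 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float values."""
    return [qi * scale for qi in q]


def quantization_mse(weights: Matrix, bits: int, per_channel: bool) -> float:
    """Mean squared error between weights and their quantize->dequantize copy."""
    if per_channel:
        # One scale per row (output channel): small rows keep their resolution.
        recon = [v for row in weights for v in dequantize(*quantize(row, bits))]
    else:
        # One scale for the whole tensor, set by its largest magnitude.
        flat = [v for row in weights for v in row]
        recon = dequantize(*quantize(flat, bits))
    orig = [v for row in weights for v in row]
    return sum((a - b) ** 2 for a, b in zip(orig, recon)) / len(orig)
```

On a small matrix whose rows differ greatly in magnitude, such as `[[0.01, -0.02, 0.015, 0.005], [1.0, -0.8, 0.5, 0.9]]`, the 4-bit per-channel error comes out lower than the per-tensor error, because the shared per-tensor scale rounds every value in the small row to zero.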

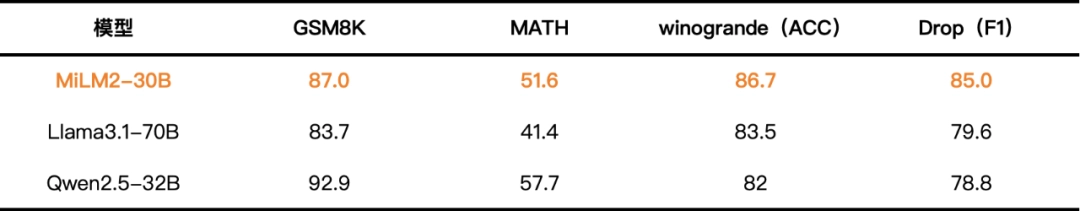

30B Model for Cloud Applications

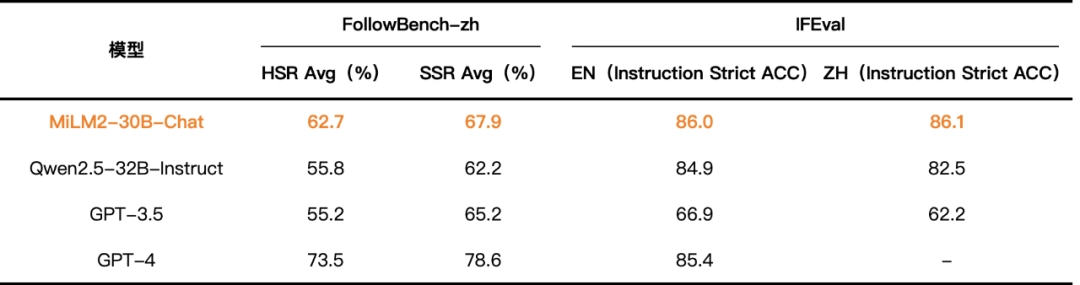

MiLM2-30B is Xiaomi's most advanced cloud-based AI model, designed for heavy workloads in demanding environments. It offers strong multitasking performance, reliable instruction following, and in-depth analysis, which suits it to complex applications such as Xiaomi's HyperOS, smart customer service, and the intelligent cockpit in Xiaomi vehicles.

Real-World Applications: Xiaomi’s “Full Ecosystem for People, Cars, and Homes”

The MiLM2 series will play a central role in Xiaomi's "Full Ecosystem for People, Cars, and Homes" strategy. MiLM2 is meant to deliver an interconnected, AI-driven experience across Xiaomi's devices, from smartphones and smart home appliances to cars. Example scenarios include:

- In Xiaomi Cars: MiLM2 improves real-time navigation, language translation, and in-car conversation, making the experience inside the car more comfortable.

- In Smart Homes: MiLM2 enhances control of IoT devices by supporting more intuitive voice commands and adapting its responses to user preferences.

- On Mobile: MiLM2 powers Xiaomi's Xiaoai assistant, improving command execution and contextual understanding in conversation, which is crucial for making smartphones smarter and the experience more personal.

The launch of MiLM2 is an important milestone in Xiaomi's AI journey. The second generation brings Xiaomi closer to its vision of seamless interconnectivity across an intelligent ecosystem that serves every part of daily life. With a broader parameter range, stronger inference, and more flexible deployment, MiLM2 is positioned to set new standards for AI in consumer electronics. As Xiaomi pursues its strategy of building a highly intelligent ecosystem in which people, cars, and homes are seamlessly integrated, MiLM2 will serve as a foundation for further AI development. It opens a new decade of smart living and shows that Xiaomi continues to push the boundaries of AI technology.

Emir Bardakçı